During my graduate studies, we discussed his article. While Carr raises several valid points, I concluded that he misses the mark. I maintain that IT is a strategic asset; it becomes a commodity only when viewed and treated as such.

Carr frames IT in the traditional definition of a commodity: “A basic good used in commerce that is interchangeable with other commodities of the same type.”[2] In this paradigm, determining your IT strategy is virtually no different from deciding which electric company will power your infrastructure. And as Carr states, “Today, no company builds its business strategy around its electricity usage.” You simply want the cheapest, most reliable provider.

However, strategy is concerned with how an organization seeks to achieve its goals.[3] If an organization seeks to leverage its IT to gain a competitive advantage, then IT becomes a strategic asset. If IT is relegated to only supporting the organization’s strategy, then Carr is correct: IT becomes a commodity.

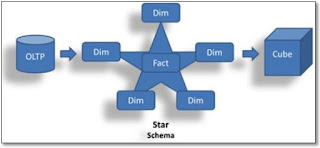

Organizations with the commodity mindset focus almost exclusively on what Andrew McAfee categorizes as Function IT (FIT) and Network IT (NIT).[4] These types of IT are generally common components such as desktop applications, communication software, and collaboration tools. Organizations that view IT as a strategic asset incorporate McAfee’s third category: Enterprise IT (EIT). These are large-scale integrated systems that design or redesign business processes.[5]

EIT is commonly seen in ERP, SCM, and CRM systems, but it can manifest in any enterprise system that seeks to improve or redefine core business functions. EIT requires careful planning and oversight by senior management to ensure the systems and processes align with business strategy and objectives. The risk can be high, but the return can be even higher.

When EIT systems are viewed as a commodity, disastrous consequences can arise. Consider Auto Windscreens, the UK’s second-largest auto glass replacement company. Auto Windscreens used “Oracle On Demand” for call center applications and back-office systems, including its inventory management. In 2010, Auto Windscreens felt that a similar system could be had at a lower price with Metrix. In other words, the ERP system was viewed as a commodity, i.e., virtually the same product at a different price. But delays and problems with the new system, coupled with lower revenues, forced the company into bankruptcy.[6]

Conversely, there are organizations that understand IT can be a strategic asset. Insurance giant MetLife had been struggling for years to create an integrated customer view. Seventy separate systems required call center reps to have 15 different screens open. Some customer service processes required 40 clicks.[7] In response to the challenges, MetLife developed a home-grown app dubbed “The Wall.” It provides reps with single-screen, single-click functionality. This innovative application utilizes big data tools to query data from disparate systems.

As the two examples demonstrate, IT does matter. In a world where IT is ubiquitous, it is easy to treat IT as a commodity, much like electricity. But this is a one-size-fits-all approach, and it prevents IT from being leveraged as a strategic asset for competitive advantage.

Continuing with the electricity analogy: if your organization is still dependent on conventional electricity while your competitor implements hydrogen fuel cells for clean and cheap power, you might find yourself at a competitive disadvantage.

References:

1. Carr, Nicholas G. 2003 May. “IT Doesn’t Matter.” Harvard Business Review. https://hbr.org/2003/05/it-doesnt-matter *

2. Investopedia. “Commodity.” http://www.investopedia.com/terms/c/commodity.asp . Accessed December 8, 2015.

3. Brown, Susan. 2015. “Module 1: Strategy.” Lecture. The University of Arizona.

4. McAfee, Andrew. 2006 November. “Mastering the Three Worlds of Information Technology.” Harvard Business Review.

5. Brown, Susan. 2015. “Module 2: Funding IT.” Lecture. The University of Arizona.

6. Krigsman, Michael. 2011 February 16. “1100-person company goes bust: IT failure or mismanagement?” ZDNet. http://www.zdnet.com/article/1100-person-company-goes-bust-it-failure-or-mismanagement/

7. Henschen, Doug. 2013 May 5. “MetLife Uses NoSQL for Customer Service Breakthrough.” InformationWeek. http://www.informationweek.com/software/information-management/metlife-uses-nosql-for-customer-service-breakthrough/d/d-id/1109919?page_number=1

* [Excerpt from article] “IT is best seen as the latest in a series of broadly adopted technologies that have reshaped industry over the past two centuries — from the steam engine and the railroad to the telegraph and the telephone to the electric generator and the internal combustion engine. For a brief period, as they were being built into the infrastructure of commerce, all these technologies opened opportunities for forward-looking companies to gain real advantages. But as their availability increased and their cost decreased — as they became ubiquitous — they became commodity inputs. From a strategic standpoint, they became invisible; they no longer mattered.” -- Nicholas Carr